More CouchDB reading: btree

Okay!

After diving into the CouchDB source code and doing an in-depth review of btree:lookup, I am ready for the real challenge: figuring out how CouchDB writes to the btree. This is the really interesting part, from my point of view.

The heart of this functionality is the query_modify method. This method allows us to perform a batch of operations on the tree in one go. Following my own ideal that all remote APIs (and this is talking to disk, hence, remote) should support batching, I really like the idea.

The first part is dedicated to building the actions list. I am not going to go over that in detail, but I will say that it first sorts the actions by key, and then by action type.

So, for example, let us say that I want to perform the following actions:

query_modify(tree, {'c', 'a'},'a','a')

What will happen is that we will first query the current value of 'a', then we will remove it, and then we will insert it. Finally, we will query the value of 'c'. This is done in order to ensure good traversal performance of the tree, as well as to ensure consistency when performing multiple operations.
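To make the ordering concrete, here is a minimal Python sketch of building such an action list. It is not CouchDB's Erlang code: the function name `build_actions`, the tuple layout and the `ACTION_RANK` table are my own assumptions, chosen only to reproduce the fetch, remove, insert order described above.

```python
# Hypothetical ranks: for the same key, fetch runs before remove, remove before insert.
ACTION_RANK = {"fetch": 0, "remove": 1, "insert": 2}


def build_actions(lookup_keys, insert_pairs, remove_keys):
    """Merge the three request lists into one list sorted by key, then by action."""
    actions = [("fetch", key, None) for key in lookup_keys]
    actions += [("remove", key, None) for key in remove_keys]
    actions += [("insert", key, value) for key, value in insert_pairs]
    # Sort on (key, action rank) so all work on one key is adjacent and ordered.
    return sorted(actions, key=lambda action: (action[1], ACTION_RANK[action[0]]))


# Query 'c' and 'a', insert 'a', remove 'a' (the value 1 is made up for illustration).
actions = build_actions(["c", "a"], [("a", 1)], ["a"])
for action in actions:
    print(action)
```

Because the list is sorted by key, the tree can be walked left to right once, which is where the good traversal behavior comes from.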

The part that really interests us is modify_node, which performs the actual work:

Now we are starting to see how things really happen. We can see that if the root is null we create a value node, and if it is not, we find the appropriate node. This follows what we have seen in the lookup.

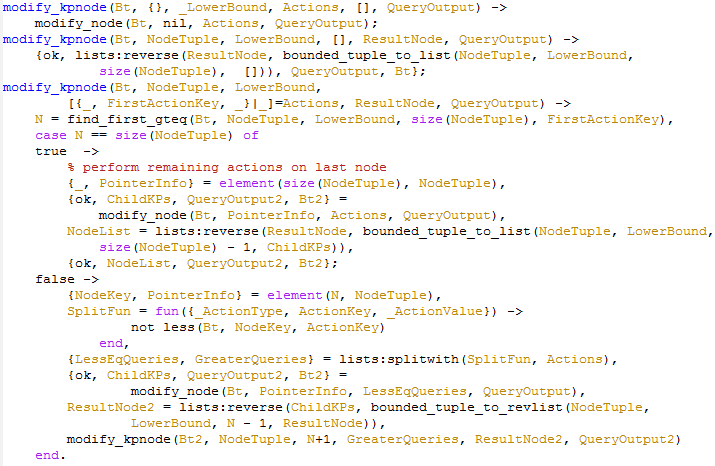

Moving on, we forward the call to modify_kpnode or modify_kvnode. I am going to touch kpnode first, since it is bound to be simpler (kvnode needs to handle splitting, I would assume).

This is far from trivial code, and I don't think that I understand it fully yet, but what it basically does is fairly simple. First we find the first node that matches the current FirstActionKey. If this is the last node that we have to deal with, we call modify_node on it, accumulate the result and return it. If it is not the last node, we split the work, sending all the actions that are less than or equal to the current FirstActionKey to be handled using modify_node (which is likely to be a key/value node and thus handled directly) and continuing to handle the rest using modify_kpnode.
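The splitting of work between child nodes can be sketched roughly like this in Python. This is my own simplification, not the Erlang code: `split_actions` is a hypothetical name, and I assume a pointer node can be summarized as a sorted list holding the largest key stored under each child.

```python
def split_actions(pointer_keys, actions):
    """Assign sorted actions to the children of a pointer node.

    pointer_keys: sorted list with the largest key under each child (assumed layout).
    actions: list of (key, op) tuples, sorted by key.
    Returns (child_index, actions_for_child) pairs, skipping untouched children.
    """
    result = []
    start = 0
    last_child = len(pointer_keys) - 1
    for index, max_key in enumerate(pointer_keys):
        if start >= len(actions):
            break  # no work left, the remaining children are untouched
        if index == last_child:
            end = len(actions)  # the last child takes everything that is left
        else:
            end = start
            while end < len(actions) and actions[end][0] <= max_key:
                end += 1
        if end > start:
            result.append((index, actions[start:end]))
        start = end
    return result


work = split_actions(
    ["f", "m", "z"],
    [("a", "fetch"), ("g", "insert"), ("h", "remove"), ("zz", "insert")],
)
print(work)
```

Children that receive no actions never appear in the result, so their existing pointers can be reused as is.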

In a way, this is the exact reverse of how lookup_kvnode is handled.

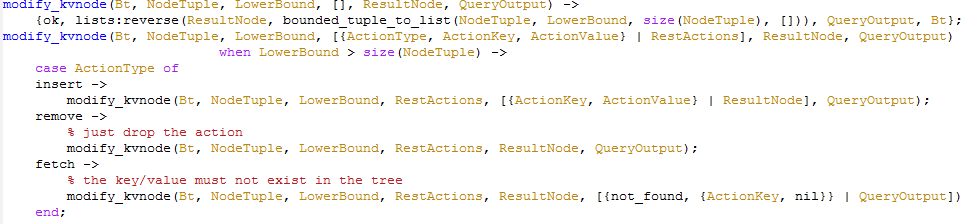

modify_kvnode is complex. I can't fit the function on a single screen at a 1280x1024 resolution. I am going to split it into two sections. It is not ideal, since they are supposed to go together, but I'm sure you'll manage.

The first part handles the case where we have no more actions to perform. In this case, we can simply return the results. The second handles the case where we run out of items in the node. Note how insert works, for example. You can see that the btree works the same way Erlang does. That is, we will always rewrite the entire node, rather than modify it. remove is also interesting. If we got to this point and haven't found the node, it doesn't exist, so we can move on. Same for fetching.

Now let us see the more complex part: what happens if we do find the items in the value node?

Note that we have AccNode, which is our accumulator. We find the first node that matches ActionKey, and then we take the NodeTuple and the AccNode and turn them into a reverse list. This copies all the items that are less than the current one to the ResultNode. Those are the ones that we are not interested in, so we can just copy them as is.

The next part handles the actual adding/removing/fetching from the node. It is pretty self explanatory, I think, so I'll leave it at that.
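Since the screenshots don't help much here, this is a rough Python sketch of the same idea: merge a sorted action list into a sorted key/value node and produce a brand new node. It is an illustration under my own assumptions (tuple layouts, `None` for a missing key, the name `modify_kvnode` reused for readability), not a port of the Erlang function.

```python
def modify_kvnode(node, actions):
    """Apply sorted actions to a sorted key/value node without mutating it.

    node: sorted list of (key, value) pairs.
    actions: list of (op, key, value), sorted by key and then fetch/remove/insert.
    Returns (new_node, query_output).
    """
    result, output = [], []
    pos = 0
    for op, key, value in actions:
        # Copy through every existing entry that sorts before the action key.
        while pos < len(node) and node[pos][0] < key:
            result.append(node[pos])
            pos += 1
        found = pos < len(node) and node[pos][0] == key
        if op == "fetch":
            output.append((key, node[pos][1] if found else None))
        elif op == "remove":
            if found:
                pos += 1  # skip the entry, so it is not copied to the new node
        elif op == "insert":
            if found:
                pos += 1  # replace the existing entry
            result.append((key, value))
    result.extend(node[pos:])  # whatever is left is untouched
    return result, output


old_node = [("a", 1), ("b", 2), ("d", 4)]
new_node, fetched = modify_kvnode(
    old_node,
    [("fetch", "a", None), ("remove", "a", None), ("insert", "a", 10),
     ("insert", "c", 3), ("fetch", "e", None)],
)
print(new_node, fetched)
```

Note that `old_node` is left exactly as it was. The caller gets a new list back, which matches the "always rewrite the entire node" behavior.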

So now we understand how modify_kvnode and modify_kpnode work. But there is nothing here about splitting nodes, which I find suspicious. Let us go back to modify_node and look at what else is going on there:

Ah, note the handling of NewNodeList, that is probably where the money is.

We make a couple of tests. The first is to see if there are any nodes left, the second is to see if we changed the node list (by comparing it to the old value). We don't care about either of those at the moment, so we will focus on the last one. write_node is called, and this is likely where we will see what we are looking for.
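The three cases can be summarized in a few lines of Python. Treat this as a sketch of the decision only: `finish_node`, the `(last_key, pointer)` entries and the `write_node` callback are hypothetical names I made up for the illustration.

```python
def finish_node(old_pointer, old_items, new_items, write_node):
    """Return the (last_key, pointer) entries that should replace this node."""
    if not new_items:
        return []  # nothing left in the node, so nothing for the parent to point at
    if new_items == old_items:
        # Unchanged: keep pointing at the copy that is already on disk.
        return [(old_items[-1][0], old_pointer)]
    return write_node(new_items)  # changed: write a new copy and point at that


storage = []  # stand-in for the append-only file


def write_node(items):
    """Hypothetical writer: append the node and return a single key pointer."""
    storage.append(list(items))
    return [(items[-1][0], len(storage) - 1)]


print(finish_node(7, [("a", 1)], [], write_node))
print(finish_node(7, [("a", 1)], [("a", 1)], write_node))
print(finish_node(7, [("a", 1)], [("a", 1), ("b", 2)], write_node))
```

Only the last call touches the storage at all, which is the case we care about next.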

Well, this is simple enough, and chunkify seems like what we were looking for. However, it bothers me that we write all the nodes to disk. It seems... wrong somehow. More specifically, since we are always appending, aren't we going to break the btree? There is also the reduce_node call that is hiding there, which we also need to check. It is being called after the node was written to disk, so I am not sure what is going on here.

Let us read chunkify first, and then deal with reduce_node.

Well... it looks like the chunkify function sucks. But anyway, what is going on there is fairly simple. We check if the size of the list that we were passed is greater than CHUNK_THRESHOLD. This is set to 1279, for some strange reason that I can't follow. I assume that the reason for that is to ensure blocks of less than 1280, but no sector size that I have heard of comes in that size.

The second part is more interesting (and complex). OutputChunks is a list of lists of the elements that started in the InList. This piece of code is very short, but it does a tremendous amount of work.
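Here is one plausible way to write such a chunking function in Python. The threshold value comes from the post, but everything else is an assumption on my part: the `size_of` callback, the way the target chunk size is derived, and the exact cut-off rule may all differ from what the Erlang code does.

```python
CHUNK_THRESHOLD = 0x4FF  # 1279, the value quoted in the post


def chunkify(items, size_of=len, threshold=CHUNK_THRESHOLD):
    """Split items into a list of lists once their total size exceeds threshold."""
    total = sum(size_of(item) for item in items)
    if total <= threshold:
        return [list(items)]  # small enough, keep everything in a single chunk
    # Assumed heuristic: estimate the chunk count, then aim for equal-sized chunks.
    likely_chunks = total // threshold + 1
    target = total // likely_chunks
    chunks, current, current_size = [], [], 0
    for item in items:
        current.append(item)
        current_size += size_of(item)
        if current_size > target:
            chunks.append(current)
            current, current_size = [], 0
    if current:
        chunks.append(current)
    return chunks


items = ["x" * 100] * 30  # 3000 "bytes" in total
chunks = chunkify(items)
print([len(chunk) for chunk in chunks])
```

The important property is that the chunks, concatenated in order, give back the original list, so key order is preserved across the new nodes.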

And now we can move back to reduce_node, like I promised.

This is short, to the point, and interesting. The idea of rereduce is that when something has changed, you don't have to go and recalculate everything from scratch, you can take partial reduced results and the new values and combine them to produce the same result that you would have if you had reduced over the entire data set.

As you can see, calling reduce_node on a key pointer node will cause a re-reduction, while on a value node, it just reduces after a map. I am assuming that the thought was that value nodes are small enough that there is no point in optimizing this.
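A small Python sketch shows why this works, using a sum as the reduction. The node layouts and the names `reduce_values` and `rereduce` are assumptions made for the example. In particular I assume each entry in a pointer node carries the reduction of the child it points to.

```python
def reduce_values(kvs):
    """Reduce over the raw values of a key/value node (here: a simple sum)."""
    return sum(value for _, value in kvs)


def rereduce(reductions):
    """Combine already-reduced partial results (here: summing the sums)."""
    return sum(reductions)


def reduce_node(node_type, items):
    if node_type == "kv_node":
        return reduce_values(items)
    # kp_node: items are (last_key, (pointer, reduction)) in this sketch.
    return rereduce([reduction for _, (_, reduction) in items])


leaf1 = [("a", 1), ("b", 2)]
leaf2 = [("c", 3)]
parent = [
    ("b", (0, reduce_node("kv_node", leaf1))),
    ("c", (1, reduce_node("kv_node", leaf2))),
]
print(reduce_node("kp_node", parent))
```

The parent gets the same answer as reducing over all the values directly, without ever reading the leaves again.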

There are a few interesting points that need to be raised, which I don't have answers for at the moment.

- Why is this happening after we write to file?

- How does this relate to CouchDB's ability to shell out to java script in order to perform maps and reductions?

- What ensures that the ordering of the node reduction matches the tree hierarchy?

At any rate, this completes our examination of write_node and modify_node, we can now go back to where we started, query_modify:

We are nearly at the end. We have seen that the nodes are written to disk after being chunkified. Note that nothing actually has a reference to them at this point. We do have KeyPointers, but they aren't attached to anything. If we crashed right now (directly after modify_node), there is no state change as far as we are concerned, we just lost some disk space that we will recover in the next compaction.

I am pretty sure that complete_root is the one responsible for hooking everything together, so let us see what it does...

Yes, I expected complete_root to be complicated as well :-)

What is going on here is the actual missing piece. This is what takes all the value nodes and turns them into pointer nodes, and does so recursively until we finally get to the point where we only have a single value returned, which is the root node. There is also handling for no nodes, in which case the tree is empty.
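The recursion is easier to see in a stripped down form. The Python sketch below is my own approximation: it groups pointers by a fixed `fanout` instead of chunking by size, and `write_node` is a hypothetical callback that appends a node to storage and returns a pointer to it.

```python
def complete_root(key_pointers, write_node, fanout=2):
    """Wrap (last_key, pointer) entries in pointer nodes until one root remains."""
    if fanout < 2:
        raise ValueError("fanout must be at least 2 or the list never shrinks")
    if not key_pointers:
        return None  # no nodes at all: the tree is empty
    if len(key_pointers) == 1:
        return key_pointers[0][1]  # a single pointer left: this is the root
    parents = []
    for start in range(0, len(key_pointers), fanout):
        group = key_pointers[start:start + fanout]
        pointer = write_node(("kp_node", group))
        parents.append((group[-1][0], pointer))  # keyed by the group's last key
    return complete_root(parents, write_node, fanout)


storage = []  # stand-in for the append-only file


def write_node(node):
    storage.append(node)
    return len(storage) - 1


# Four value nodes, already written at positions 0..3.
leaves = [("a", 0), ("b", 1), ("c", 2), ("d", 3)]
storage.extend(("kv_node", key) for key, _ in leaves)
root = complete_root(leaves, write_node)
print(root, storage[root])
```

Each level shrinks the list, so the recursion always ends with either nothing (an empty tree) or a single root pointer.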

Basically, what this means is that the way CouchDB is able to achieve ACID using a btree is by saving the modified tree structure to disk on each commit. Since it is only the modified part that is saved, and since btree structures are really small, it has no real storage penalty. Now I want to go and find what actually saves the new root to disk, since query_modify does not modify the actual header (which means that in the case of a crash, nothing will change from the point of view of the system).

Right now I suspect that this is intentional, and that it allows combining even more actions into a single transaction, even beyond what you can do in a single query_modify. This is especially true since the common interface for those would usually be add, remove, etc.

As an interesting side effect, this is also how CouchDB is able to handle MVCC. Since everything is immutable, it can just hand a tree reference to the calling code and forget about it. No changes ever occur, so you get serializable isolation level by default. And, from what I can see, basically for free.
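Here is a toy Python model of that idea, assuming an append-only list stands in for the file and a single `root` field stands in for the header. The class and its method names are hypothetical. The point is only to show that a reader holding an old root keeps seeing the old data.

```python
class AppendOnlyStore:
    """Toy model: nodes are only ever appended, and the header points at a root."""

    def __init__(self):
        self.nodes = []   # append-only "file"
        self.root = None  # "header": pointer to the current root

    def append(self, node):
        self.nodes.append(node)
        return len(self.nodes) - 1

    def commit(self, new_root):
        self.root = new_root  # the only step that changes what readers will see

    def read(self, pointer):
        return self.nodes[pointer]


store = AppendOnlyStore()
store.commit(store.append((("a", 1),)))

snapshot = store.root                        # a reader grabs the current root
new_root = store.append((("a", 1), ("b", 2)))
root_before_commit = store.root              # a crash here loses nothing visible
store.commit(new_root)

print(store.read(snapshot))    # the old reader still sees the old tree
print(store.read(store.root))  # new readers see the new tree
```

Nothing the writer does can change what sits behind `snapshot`, so the reader needs no locks.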

Going over the couch_httpd file, it seems that the main interface is couch_db, so I am heading that way... and I run into some very complex functions that I don't really feel like reading right now.

Hope this post doesn't contain too many mistakes...

Comments

Great posts! A few answers:

The ideal size of CHUNK_THRESHOLD was determined to be 0x4FF when timing some test runs on my MacBook Pro. Yup, it's all part of the chunkify function (chunking a large btree node into smaller nodes), which sucks :)

The reduction is stored in parent node. We write the node, reduce the node and then store the reduction results with the pointer in the parent node.

The btree code doesn't know about javascript, it is just given map and reduce functions to call. The view engine provides the map and reduce functions, and those functions then do the talking to external code.

The reduction functions are supposed to be commutative and associative, so the order in which reduction results are calculated doesn't matter. I think that answers the question?
